Hello.

Many techniques, such as ReLU and Batch Normalization, have been introduced to tackle problems like vanishing gradients and overfitting that arise in a plain network (one that simply stacks more and more layers).

At ILSVRC 2015, ResNet won with a top-5 error of 3.57%, becoming the first entry in the competition to beat the commonly cited human-level error (about 5.1%).

Its impact shows in its enormous number of citations.

That is why ResNet is treated as something of a bible in deep learning for computer vision.

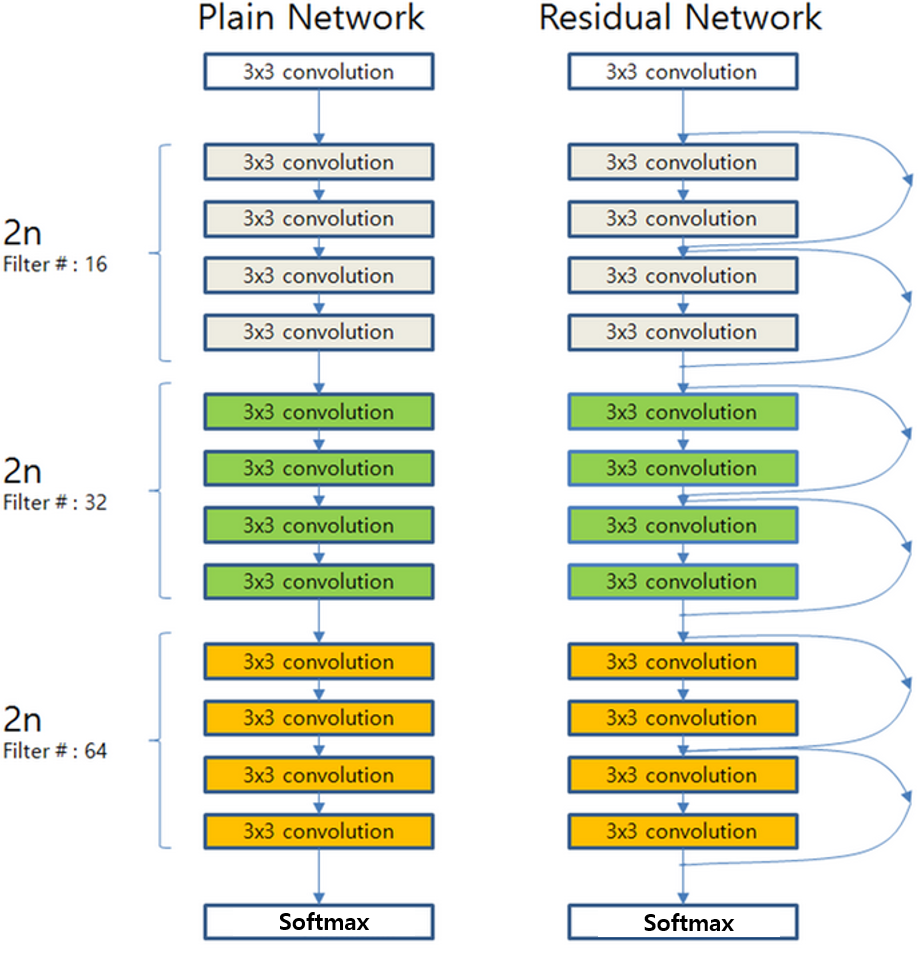

Whereas a plain network simply stacks convolution layers, ResNet works in blocks: each block adds its input back to the output of its layers before passing the result on to the next block.

F(x) : weight layer => relu => weight layer

x : identity

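The paper writes the desired underlying mapping as H(x) and lets the weight layers learn the residual mapping F(x) = H(x) - x instead. The block then outputs F(x) + x, where x bypasses the weight layers through an identity shortcut connection. The structure in the figure is called a Residual Block, and a network built by stacking Residual Blocks is called a Residual Network (ResNet).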
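In the paper's notation, the relations above can be written as follows for the two-layer block in the figure (biases omitted, σ = ReLU). The second ReLU is applied after the addition, i.e. the block emits σ(y). When the input and output dimensions differ, the paper replaces the identity with a linear projection W_s.

```latex
\mathcal{F}(\mathbf{x}) := \mathcal{H}(\mathbf{x}) - \mathbf{x}, \qquad
\mathcal{F}(\mathbf{x}, \{W_i\}) = W_2\,\sigma(W_1\mathbf{x})

\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}
\quad\text{(identity shortcut)}, \qquad
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\mathbf{x}
\quad\text{(projection shortcut)}
```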

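The idea is simple, but it made very deep networks far easier to train and improved accuracy. The identity shortcut gives the gradient a direct path back to earlier layers, which helps against vanishing gradients. Note that the paper frames the main issue as degradation (a deeper plain network reaching higher training error than a shallower one) and states explicitly that this is not caused by overfitting.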
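Why the shortcut helps gradients: for y = F(x) + x the chain rule gives ∂y/∂x = ∂F/∂x + I, so the upstream gradient reaches x even when ∂F/∂x is tiny. The sketch below checks this with PyTorch autograd under a deliberately extreme assumption: every weight and bias in F is zeroed, so F passes no gradient at all. The channel count (4) and input size (8×8) are arbitrary illustrative choices.

```python
import torch
import torch.nn as nn

# A toy residual branch F(x): conv -> ReLU -> conv, same shape in and out
branch = nn.Sequential(
    nn.Conv2d(4, 4, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(4, 4, kernel_size=3, padding=1),
)
# Assumption for the demo: zero all weights and biases so dF/dx = 0,
# the worst case where the weight layers let no gradient through
for p in branch.parameters():
    nn.init.zeros_(p)

x = torch.randn(1, 4, 8, 8, requires_grad=True)

# Plain path: y = F(x)
plain_out = branch(x)
plain_out.sum().backward()
plain_grad = x.grad.clone()
x.grad = None  # reset before the second backward pass

# Residual path: y = F(x) + x
res_out = branch(x) + x
res_out.sum().backward()
res_grad = x.grad.clone()

print(plain_grad.abs().max().item())  # 0.0: nothing reaches x
print(res_grad.abs().max().item())    # 1.0: identity passes dL/dy straight through
```

In a real network F is not zero, but the same additive identity term keeps a gradient path open however deep the stack gets.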

Residual connections have since been used in many architectures, including SENet, the winner of ILSVRC 2017 (which is also why I wrote this post).

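```python
import torch.nn as nn


class Residual_Block(nn.Module):
    def __init__(self, in_dim, mid_dim, out_dim):
        super(Residual_Block, self).__init__()
        # Residual function F(x): weight layer -> ReLU -> weight layer
        self.residual_block = nn.Sequential(
            nn.Conv2d(in_dim, mid_dim, kernel_size=3, padding=1),
            nn.ReLU(),  # must be an instance, not the class nn.ReLU
            nn.Conv2d(mid_dim, out_dim, kernel_size=3, padding=1),
        )
        # Shortcut: identity when channel counts match; otherwise F(x) + x
        # would fail, so project x with a 1x1 conv (the paper's projection shortcut)
        if in_dim == out_dim:
            self.shortcut = nn.Identity()
        else:
            self.shortcut = nn.Conv2d(in_dim, out_dim, kernel_size=1)
        self.relu = nn.ReLU()

    def forward(self, x):
        out = self.residual_block(x)  # F(x)
        out = out + self.shortcut(x)  # F(x) + x
        out = self.relu(out)          # ReLU after the addition
        return out
```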
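Stacking Residual Blocks is what turns them into a Residual Network. Below is a minimal sketch, assuming a hypothetical `TinyResNet` made of a stem convolution, a few same-width blocks, global average pooling and a linear classifier. The block is redefined here as `ResidualBlockSketch` (also a hypothetical name) so the snippet runs on its own, and all sizes are illustrative rather than the paper's configuration.

```python
import torch
import torch.nn as nn


class ResidualBlockSketch(nn.Module):
    """Two 3x3 convs with an identity shortcut (channel count unchanged)."""

    def __init__(self, channels):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
        )
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.body(x) + x)  # ReLU(F(x) + x)


class TinyResNet(nn.Module):
    """Hypothetical toy network: stem -> N residual blocks -> pool -> linear."""

    def __init__(self, num_blocks=3, channels=16, num_classes=10):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(3, channels, kernel_size=3, padding=1), nn.ReLU()
        )
        self.blocks = nn.Sequential(
            *[ResidualBlockSketch(channels) for _ in range(num_blocks)]
        )
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(channels, num_classes)

    def forward(self, x):
        x = self.blocks(self.stem(x))
        x = self.pool(x).flatten(1)  # (N, C, 1, 1) -> (N, C)
        return self.fc(x)


model = TinyResNet()
logits = model(torch.randn(2, 3, 32, 32))
print(logits.shape)  # torch.Size([2, 10])
```

Because every block keeps the channel count, the identity shortcut always lines up; going deeper is just a matter of raising `num_blocks`.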

After the Residual Block, the paper introduces the BottleNeck design. The post below is a good reference for it.

[Deep Learning] DeepLearning CNN BottleNeck Principle (PyTorch Implementation): coding-yoon.tistory.com/116?category=825914

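For a quick picture of the BottleNeck idea: the paper's bottleneck block swaps the two 3×3 convolutions for 1×1 → 3×3 → 1×1, where the first 1×1 reduces the channels, the 3×3 runs on the reduced width, and the last 1×1 restores it (256 → 64 → 64 → 256 in the paper's example). The sketch below leaves out BatchNorm, like the block earlier in this post; `BottleneckSketch` and `count_params` are hypothetical names. It compares parameter counts against a basic two-3×3 block at the same 256-channel width.

```python
import torch
import torch.nn as nn


class BottleneckSketch(nn.Module):
    """1x1 reduce -> 3x3 -> 1x1 restore, plus an identity shortcut."""

    def __init__(self, channels, reduced):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, reduced, kernel_size=1),            # reduce channels
            nn.ReLU(),
            nn.Conv2d(reduced, reduced, kernel_size=3, padding=1),  # 3x3 on fewer channels
            nn.ReLU(),
            nn.Conv2d(reduced, channels, kernel_size=1),            # restore channels
        )
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.body(x) + x)


def count_params(module):
    return sum(p.numel() for p in module.parameters())


bottleneck = BottleneckSketch(256, 64)
# Basic two-3x3 residual branch at the same 256-channel width, for comparison
basic = nn.Sequential(
    nn.Conv2d(256, 256, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(256, 256, kernel_size=3, padding=1),
)

x = torch.randn(1, 256, 14, 14)
print(bottleneck(x).shape)       # torch.Size([1, 256, 14, 14])
print(count_params(bottleneck))  # 70016
print(count_params(basic))       # 1180160
```

At equal width the bottleneck needs roughly 1/17 of the parameters, which is what makes the deeper ResNet variants affordable.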

Original ResNet paper: Deep Residual Learning for Image Recognition (arxiv.org)
