Hello. This is the third part of my Xception review.

1. The Xception architecture

in particular the VGG-16 architecture, which is schematically similar to our proposed architecture in a few respects.

In particular, the VGG-16 architecture is schematically similar to the Xception architecture in a few respects.

The Inception architecture family of convolutional neural networks, which first demonstrated the advantages of factoring convolutions into multiple branches operating successively on channels and then on space.

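In other words, the Inception family was the first to show the benefit of splitting a convolution into several branches that operate first on the channels (cross-channel correlations) and then on the spatial dimensions (spatial correlations).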
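To make "first channels, then space" concrete, here is a small sketch comparing the two orderings the paper contrasts. The "extreme" Inception module applies a 1x1 convolution first and then a 3x3 spatial convolution to each output channel separately. A depthwise separable convolution, as it is usually implemented, does the spatial step first and the 1x1 step second. The channel counts and input size are arbitrary assumptions, and biases are disabled so that only weights are counted.

```python
import torch
import torch.nn as nn


def count_params(module: nn.Module) -> int:
    return sum(p.numel() for p in module.parameters())


def extreme_inception(in_ch: int, out_ch: int) -> nn.Sequential:
    # Channels first (1x1 cross-channel conv), then space (3x3 conv on each channel separately)
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=1, bias=False),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1, groups=out_ch, bias=False),
    )


def depthwise_separable(in_ch: int, out_ch: int) -> nn.Sequential:
    # Space first (3x3 conv on each channel separately), then channels (1x1 cross-channel conv)
    return nn.Sequential(
        nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=in_ch, bias=False),
        nn.Conv2d(in_ch, out_ch, kernel_size=1, bias=False),
    )


x = torch.randn(1, 64, 32, 32)
a, b = extreme_inception(64, 64), depthwise_separable(64, 64)
print(a(x).shape, b(x).shape)            # both torch.Size([1, 64, 32, 32])
print(count_params(a), count_params(b))  # 4672 4672
```

When the input and output channel counts are equal, both orderings have the same number of weights: 64*64 + 9*64 = 4672. They differ only in which step comes first.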

Depthwise separable convolutions, which our proposed architecture is entirely based upon. ~~

This refers to the advantage of depthwise separable convolutions discussed earlier: they need far fewer parameters and operations than a standard convolution, which makes them faster. (See also the earlier post on Depthwise Separable Convolution with PyTorch at coding-yoon.tistory.com.)


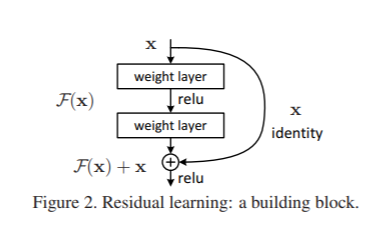

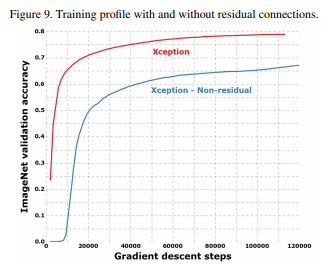
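The claim that this requires fewer operations can be checked with a little arithmetic. Below is a plain-Python sketch that ignores biases. The 3x3 kernel, 728 channels, and 19x19 feature map are example values, chosen to match the size of a middle-flow layer in Xception.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # One k x k x c_in filter per output channel (bias ignored)
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    # Depthwise: one k x k filter per input channel; pointwise: 1x1 filters mixing c_in -> c_out
    return k * k * c_in + c_in * c_out


def mult_adds(params: int, h: int, w: int) -> int:
    # With stride 1 and 'same' padding, every weight is applied once per output pixel
    return params * h * w


k, c, hw = 3, 728, 19  # 3x3 kernels, 728 -> 728 channels, 19x19 feature map
std = standard_conv_params(k, c, c)
sep = separable_conv_params(k, c, c)
print(std, sep)             # 4769856 536536
print(round(std / sep, 2))  # 8.89
print(mult_adds(std, hw, hw), mult_adds(sep, hw, hw))
```

In general, the cost ratio is separable / standard = 1/C_out + 1/k^2. For 3x3 kernels, the savings therefore approach 9x as the number of output channels grows.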

Residual connections, introduced by He et al. in [4], which our proposed architecture uses extensively.

Residual connections as used in ResNet (He et al., "Deep Residual Learning for Image Recognition", CVPR 2016): https://openaccess.thecvf.com/content_cvpr_2016/papers/He_Deep_Residual_Learning_CVPR_2016_paper.pdf

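To briefly recap residual connections: the motivation behind ResNet is that a deeper network (more hidden layers) should, in principle, train at least as well as a shallower one. In practice, it often trains worse. Vanishing/exploding gradients are one cause. Batch Normalization mitigates them to a degree, but deeper plain networks still suffer from what the ResNet paper calls the degradation problem. The remedy is the residual connection (shortcut mapping): the block's input is added back to its output, y = F(x) + x. The layers then only need to learn the residual F(x), and gradients get a direct path through the identity that keeps them from vanishing. It is a simple idea, but it works remarkably well.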

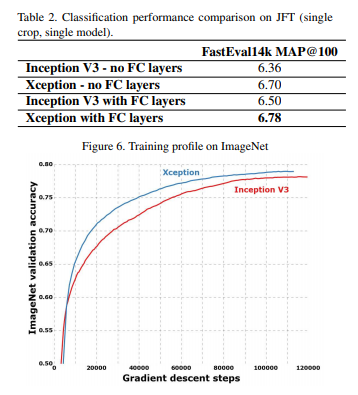
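The following toy model illustrates why the identity path helps. It is a simplification for illustration, not taken from the ResNet paper. Suppose each layer's local derivative is a small scalar g. Stacking plain layers multiplies the backpropagated gradient by g at every layer, while a residual layer y = F(x) + x multiplies it by g + 1.

```python
def chained_gradient(local_grad: float, depth: int, residual: bool) -> float:
    """Backpropagated gradient through `depth` identical scalar layers (toy model)."""
    grad = 1.0
    for _ in range(depth):
        # Plain layer y = F(x):         dy/dx = F'(x)
        # Residual layer y = F(x) + x:  dy/dx = F'(x) + 1
        grad *= (local_grad + 1.0) if residual else local_grad
    return grad


plain = chained_gradient(0.1, depth=20, residual=False)
res = chained_gradient(0.1, depth=20, residual=True)
print(f"plain: {plain:.1e}, residual: {res:.2f}")  # plain: 1.0e-20, residual: 6.73
```

In a real network, F'(x) is a Jacobian rather than a scalar, and a product of residual terms can also grow. This sketch only shows the direction of the effect.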

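The paper evaluates on ImageNet and on Google's internal JFT dataset. Models trained on JFT are evaluated on an auxiliary dataset called FastEval14k. The input size is 299x299x3.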

2. Implementation

2-1 depthwise separable convolution
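The depthwise step relies on the `groups` argument of `nn.Conv2d`. With `groups` equal to the number of input channels, each channel gets its own filter, and output channel i sees only input channel i. The sketch below checks that property; the channel count and input size are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
channels = 4
depthwise = nn.Conv2d(channels, channels, kernel_size=3, padding=1, groups=channels, bias=False)
print(depthwise.weight.shape)  # torch.Size([4, 1, 3, 3]): one 3x3 filter per channel

x = torch.randn(1, channels, 8, 8)
x_changed = x.clone()
x_changed[:, 0] += 1.0  # perturb input channel 0 only

with torch.no_grad():
    # Total absolute change per output channel
    diff = (depthwise(x_changed) - depthwise(x)).abs().sum(dim=(0, 2, 3))

# Only output channel 0 changes; channels 1..3 are untouched
print(diff)
```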

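```python
import torch
import torch.nn as nn

# Note: the paper follows every (separable) convolution with BatchNorm; it is omitted in this code.


def depthwise_separable_conv(input_dim, output_dim):
    # Depthwise: one 3x3 filter per input channel (groups=input_dim); padding=1 keeps H and W
    depthwise_convolution = nn.Conv2d(input_dim, input_dim, kernel_size=3, padding=1,
                                      groups=input_dim, bias=False)
    # Pointwise: 1x1 convolution that mixes channels, input_dim -> output_dim
    pointwise_convolution = nn.Conv2d(input_dim, output_dim, kernel_size=1, bias=False)
    # No non-linearity between the two steps, as in the paper
    model = nn.Sequential(
        depthwise_convolution,
        pointwise_convolution
    )
    return model
```

2-2 Entry flow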
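The entry flow downsamples a 299x299 input to the 19x19 grid that the middle flow works on. The standard output-size formula, floor((n + 2p - k) / s) + 1, traces this. The sketch below plugs in the kernel, stride, and padding values used in the code of this section: padding 0 for the plain convolutions and padding 1 for the max pools. It adds one more step for the exit-flow max pool.

```python
def conv_out(n: int, k: int, s: int = 1, p: int = 0) -> int:
    # Output length of a convolution / pooling window along one spatial axis
    return (n + 2 * p - k) // s + 1


sizes = [299]
sizes.append(conv_out(sizes[-1], k=3, s=2))       # conv2d_init_1 -> 149
sizes.append(conv_out(sizes[-1], k=3, s=1))       # conv2d_init_2 -> 147
for _ in range(3):                                 # layer_1..layer_3 (separable convs keep the size)
    pooled = conv_out(sizes[-1], k=3, s=2, p=1)    # MaxPool2d(3, stride=2, padding=1)
    shortcut = conv_out(sizes[-1], k=1, s=2)       # 1x1 stride-2 shortcut conv
    assert pooled == shortcut, "both branches must match to be added"
    sizes.append(pooled)
sizes.append(conv_out(sizes[-1], k=3, s=2, p=1))  # exit-flow max pool -> 10

print(sizes)  # [299, 149, 147, 74, 37, 19, 10]
```

The assert inside the loop explains why the shortcut uses a 1x1 convolution with stride 2. It must produce exactly the same spatial size as the pooled branch for the element-wise addition to work.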

```python
class entry_flow(nn.Module):
    def __init__(self):
        super(entry_flow, self).__init__()
        # 299x299x3 -> 149x149x32
        self.conv2d_init_1 = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=2)
        # 149x149x32 -> 147x147x64
        self.conv2d_init_2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1)

        # Block 1: 147x147x64 -> 74x74x128
        self.layer_1 = nn.Sequential(
            depthwise_separable_conv(input_dim=64, output_dim=128),
            nn.ReLU(),
            depthwise_separable_conv(input_dim=128, output_dim=128),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        # 1x1 stride-2 shortcut so the residual matches the block's output shape
        self.conv2d_1 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=1, stride=2)

        # Block 2: 74x74x128 -> 37x37x256 (starts with a ReLU, as in the paper)
        self.layer_2 = nn.Sequential(
            nn.ReLU(),
            depthwise_separable_conv(input_dim=128, output_dim=256),
            nn.ReLU(),
            depthwise_separable_conv(input_dim=256, output_dim=256),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        self.conv2d_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=1, stride=2)

        # Block 3: 37x37x256 -> 19x19x728 (starts with a ReLU, as in the paper)
        self.layer_3 = nn.Sequential(
            nn.ReLU(),
            depthwise_separable_conv(input_dim=256, output_dim=728),
            nn.ReLU(),
            depthwise_separable_conv(input_dim=728, output_dim=728),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        self.conv2d_3 = nn.Conv2d(in_channels=256, out_channels=728, kernel_size=1, stride=2)

        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.relu(self.conv2d_init_1(x))
        x = self.relu(self.conv2d_init_2(x))

        # Each block: separable-conv branch + 1x1 conv shortcut (nn.ReLU is not in-place,
        # so the shortcut still receives the un-activated input)
        output1 = self.layer_1(x) + self.conv2d_1(x)
        output2 = self.layer_2(output1) + self.conv2d_2(output1)
        output3 = self.layer_3(output2) + self.conv2d_3(output2)
        return output3
```

2-3 Middle flow
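One PyTorch pitfall matters for the middle flow. Appending the same module object to an `nn.ModuleList` several times does not create copies: every entry points to the same weights. The sketch below uses `nn.Linear` as a small stand-in for one middle-flow block.

```python
import torch.nn as nn


def count_params(module: nn.Module) -> int:
    # parameters() yields each shared tensor only once
    return sum(p.numel() for p in module.parameters())


block = nn.Linear(4, 4)  # stand-in for one middle-flow block: 16 weights + 4 biases = 20

shared = nn.ModuleList()
for _ in range(8):
    shared.append(block)  # the SAME object 8 times -> one set of weights

separate = nn.ModuleList([nn.Linear(4, 4) for _ in range(8)])  # 8 independent blocks

print(count_params(shared), count_params(separate))        # 20 160
print(shared[0] is shared[7], separate[0] is separate[7])  # True False
```

With shared weights, the stack still runs and has the expected depth, but it learns only one block's worth of parameters. This is why each block should be constructed inside the loop.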

```python
class middle_flow(nn.Module):
    def __init__(self, num_blocks=8):
        super(middle_flow, self).__init__()
        self.module_list = nn.ModuleList()
        # The paper repeats this block 8 times. A NEW Sequential is built on every iteration:
        # appending the same object repeatedly would make all blocks share one set of weights.
        for _ in range(num_blocks):
            layers = nn.Sequential(
                nn.ReLU(),
                depthwise_separable_conv(input_dim=728, output_dim=728),
                nn.ReLU(),
                depthwise_separable_conv(input_dim=728, output_dim=728),
                nn.ReLU(),
                depthwise_separable_conv(input_dim=728, output_dim=728)
            )
            self.module_list.append(layers)

    def forward(self, x):
        # 19x19x728 in, 19x19x728 out; identity shortcut around every block
        for layer in self.module_list:
            x = x + layer(x)
        return x
```

2-4 Exit flow
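In the exit flow, `nn.AdaptiveAvgPool2d(1)` performs global average pooling: each of the 2048 feature maps is reduced to its mean. The sketch below checks that this matches a plain mean over the spatial axes. The 10x10 size used here is the feature-map size that reaches the pool for a 299x299 input.

```python
import torch
import torch.nn as nn

features = torch.randn(2, 2048, 10, 10)  # (batch, channels, height, width)

gap = nn.AdaptiveAvgPool2d(1)
pooled = torch.flatten(gap(features), 1)  # (2, 2048, 1, 1) -> (2, 2048)
manual = features.mean(dim=(2, 3))        # average over height and width

print(pooled.shape)                               # torch.Size([2, 2048])
print(torch.allclose(pooled, manual, atol=1e-6))  # True
```

The pooled vector always has length 2048, whatever the spatial size. The following `nn.Linear(2048, ...)` layer therefore does not depend on the exact input resolution.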

```python
class exit_flow(nn.Module):
    def __init__(self, num_classes=10):
        super(exit_flow, self).__init__()
        # Separable-conv branch: 19x19x728 -> 10x10x1024
        self.separable_network = nn.Sequential(
            nn.ReLU(),
            depthwise_separable_conv(input_dim=728, output_dim=728),
            nn.ReLU(),
            depthwise_separable_conv(input_dim=728, output_dim=1024),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        # 1x1 stride-2 shortcut: 19x19x728 -> 10x10x1024
        self.conv2d_1 = nn.Conv2d(in_channels=728, out_channels=1024, kernel_size=1, stride=2)

        self.separable_conv_1 = depthwise_separable_conv(input_dim=1024, output_dim=1536)
        self.separable_conv_2 = depthwise_separable_conv(input_dim=1536, output_dim=2048)
        self.relu = nn.ReLU()
        # Global average pooling: 10x10x2048 -> 1x1x2048
        self.avgpooling = nn.AdaptiveAvgPool2d(1)
        # Classifier head (10 classes by default, as in the original code)
        self.fc_layer = nn.Linear(2048, num_classes)

    def forward(self, x):
        y = self.separable_network(x) + self.conv2d_1(x)
        y = self.relu(self.separable_conv_1(y))
        y = self.relu(self.separable_conv_2(y))
        y = self.avgpooling(y)
        y = torch.flatten(y, 1)  # (N, 2048, 1, 1) -> (N, 2048)
        return self.fc_layer(y)
```

2-5 Xception

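```python
class Xception(nn.Module):
    def __init__(self):
        super(Xception, self).__init__()
        self.entry_flow = entry_flow()    # 299x299x3 -> 19x19x728
        self.middle_flow = middle_flow()  # 19x19x728 -> 19x19x728
        self.exit_flow = exit_flow()      # 19x19x728 -> class scores

    def forward(self, x):
        x = self.entry_flow(x)
        x = self.middle_flow(x)
        x = self.exit_flow(x)
        return x


if __name__ == "__main__":
    # Quick shape check
    model = Xception()
    out = model(torch.randn(1, 3, 299, 299))
    print(out.shape)  # torch.Size([1, 10])
```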
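One difference from the paper remains. The caption of the paper's architecture figure notes that all Convolution and SeparableConvolution layers are followed by batch normalization, which the code above leaves out. Below is a minimal sketch of the first entry-flow block with `nn.BatchNorm2d` added after each convolution. The class name `EntryBlockBN` and helper `separable_conv_bn` are hypothetical names for illustration.

```python
import torch
import torch.nn as nn


def separable_conv_bn(in_ch: int, out_ch: int) -> nn.Sequential:
    # Depthwise 3x3 -> pointwise 1x1 -> BatchNorm (no activation in between)
    return nn.Sequential(
        nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=in_ch, bias=False),
        nn.Conv2d(in_ch, out_ch, kernel_size=1, bias=False),
        nn.BatchNorm2d(out_ch),
    )


class EntryBlockBN(nn.Module):
    """Hypothetical BN variant of the first entry-flow block (64 -> 128 channels, stride 2)."""

    def __init__(self, in_ch: int = 64, out_ch: int = 128):
        super().__init__()
        self.residual = nn.Sequential(
            separable_conv_bn(in_ch, out_ch),
            nn.ReLU(),
            separable_conv_bn(out_ch, out_ch),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        # Shortcut: 1x1 stride-2 conv + BN (no conv bias, since BN adds its own shift)
        self.shortcut = nn.Sequential(
            nn.Conv2d(in_ch, out_ch, kernel_size=1, stride=2, bias=False),
            nn.BatchNorm2d(out_ch),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.residual(x) + self.shortcut(x)


block = EntryBlockBN()
out = block(torch.randn(2, 64, 147, 147))
print(out.shape)  # torch.Size([2, 128, 74, 74])
```

Dropping `bias` in convolutions that are immediately followed by BN is a common convention, because BN's learnable shift makes the bias redundant.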

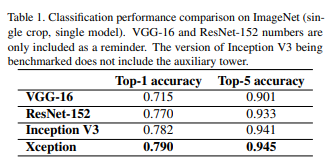

3. Experiment result

4. Conclusions

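Depthwise separable convolutions offer properties similar to Inception modules, yet they are as easy to use as standard convolutions. Thanks to their strong performance and reduced computation, they are expected to become a foundation of CNN architecture design. This concludes my review of the Xception paper. Since this was my first paper review, I could not explain everything as clearly as I wanted. If you spot anything that is wrong or missing, I would appreciate your feedback.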